TIP Integration Overview: MISP

Install Enrichment Integration

Install From GitHub

Ensure that MISP is running the latest commit from the misp-modules GitHub repository.

Current GreyNoise Module Version

The current version of the GreyNoise misp-module is v1.2. Ensure this version is enabled in your MISP instance to use the features outlined below.

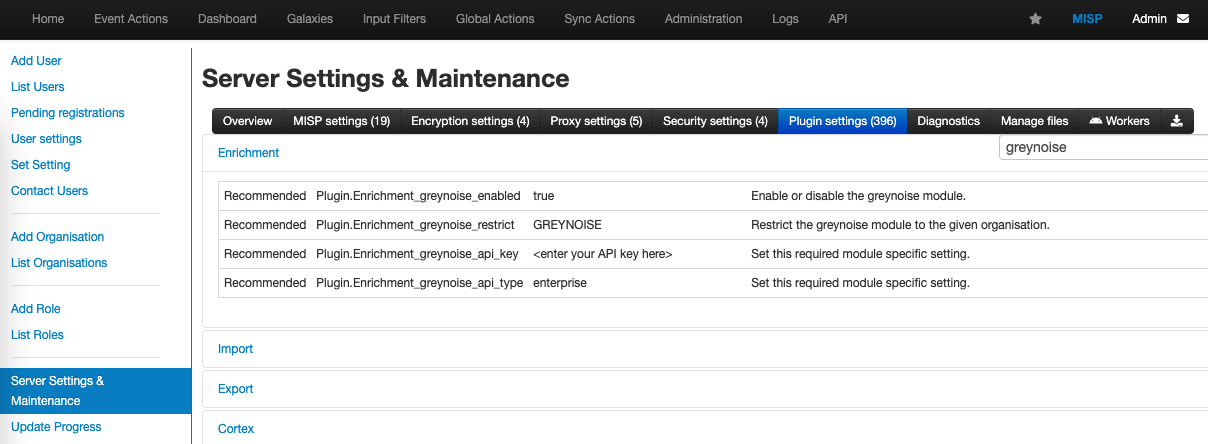

Configure Plugin Settings

Navigate to the Server Settings & Maintenance menu in MISP, then select Plugin Settings. Expand the Enrichment section and search for "greynoise".

Settings:

- Plugin.Enrichment_greynoise_enabled = set to true

- Plugin.Enrichment_greynoise_restrict = select an Org if you wish to restrict access

- Plugin.Enrichment_greynoise_api_key = enter a GreyNoise API Key

- Plugin.Enrichment_greynoise_api_type = enter enterprise or community, depending on the API Key type

Enter GreyNoise module settings to enable the module.
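The settings above can be summarized as key/value pairs. The following is a minimal sketch, not part of the GreyNoise module, that assembles them and rejects an unexpected API type before the values are entered in MISP. The helper name `build_greynoise_settings` and the placeholder key are hypothetical.

```python
from typing import Dict, Optional

# The two API key types named in the settings list above.
VALID_API_TYPES = {"enterprise", "community"}


def build_greynoise_settings(
    api_key: str, api_type: str, restrict_org: Optional[str] = None
) -> Dict[str, object]:
    """Return the plugin settings listed above as a dictionary (hypothetical helper)."""
    api_type = api_type.strip().lower()
    if api_type not in VALID_API_TYPES:
        raise ValueError(f"api_type must be one of {sorted(VALID_API_TYPES)}")
    if not api_key:
        raise ValueError("api_key must not be empty")
    settings: Dict[str, object] = {
        "Plugin.Enrichment_greynoise_enabled": True,
        "Plugin.Enrichment_greynoise_api_key": api_key,
        "Plugin.Enrichment_greynoise_api_type": api_type,
    }
    if restrict_org is not None:
        # Only set the restriction when access should be limited to one Org.
        settings["Plugin.Enrichment_greynoise_restrict"] = restrict_org
    return settings


if __name__ == "__main__":
    for key, value in build_greynoise_settings("your-key-here", "community").items():
        print(f"{key} = {value}")
```

Validating the API type up front is a design choice of this sketch: a mistyped value is caught before it reaches the module configuration.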

Performing an Enrich IP Lookup

Enrich Action requires v1.2 of the module and the greynoise-ip object

For the GreyNoise enrich action to return data on each event, v1.2 of the module must be installed, along with the greynoise-ip object: https://github.com/MISP/misp-objects/tree/main/objects/greynoise-ip

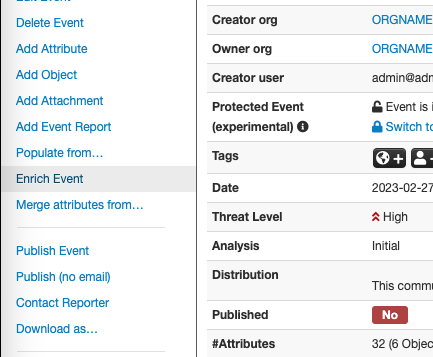

From the Event Details page, select the Enrich Event option.

Event details page, Enrich Event function.

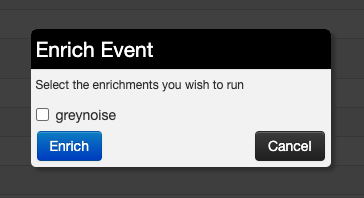

From the list of available enrichments, select the greynoise option, then click the Enrich button.

Enrichment selection dialog box.

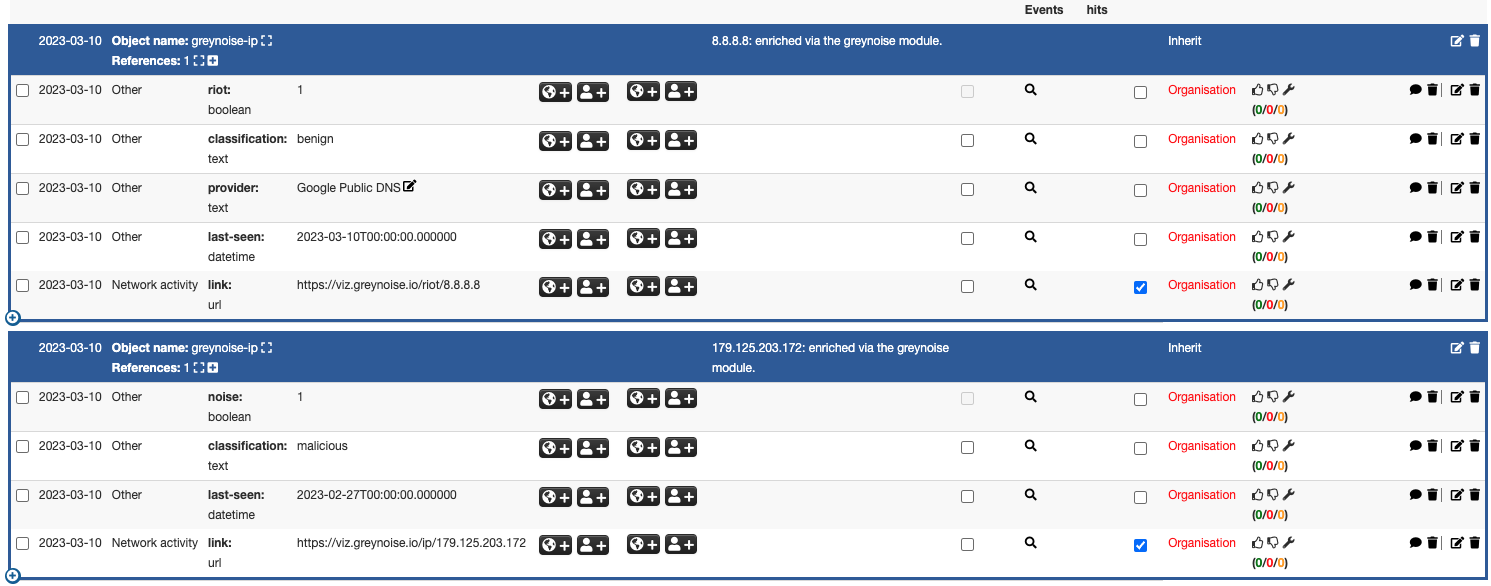

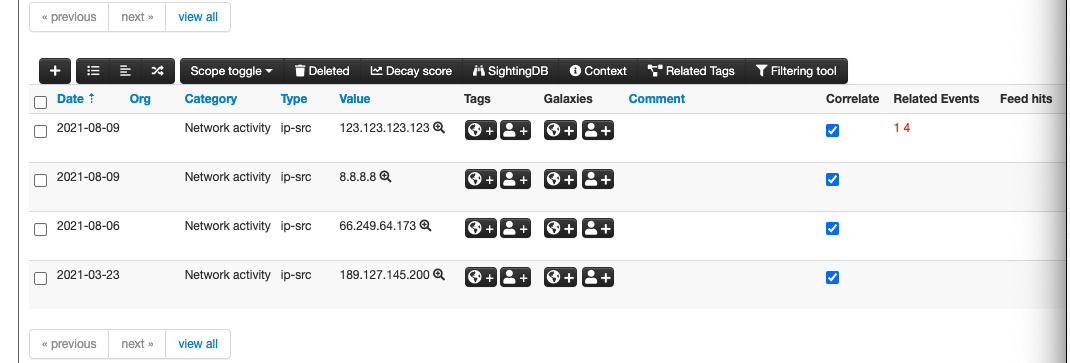

Once the enrichment process finishes, each IP on the event will contain the greynoise-ip enrichment information. Additional details on an IP can be found by using the Hover enrichment below.

GreyNoise enrichment data output.

Performing a Hover IP Lookup

From the Event Details view, select the magnifying glass icon next to an IP indicator to pull details from GreyNoise on that IP.

Click the magnifying glass next to the IP indicator to query the GreyNoise module.

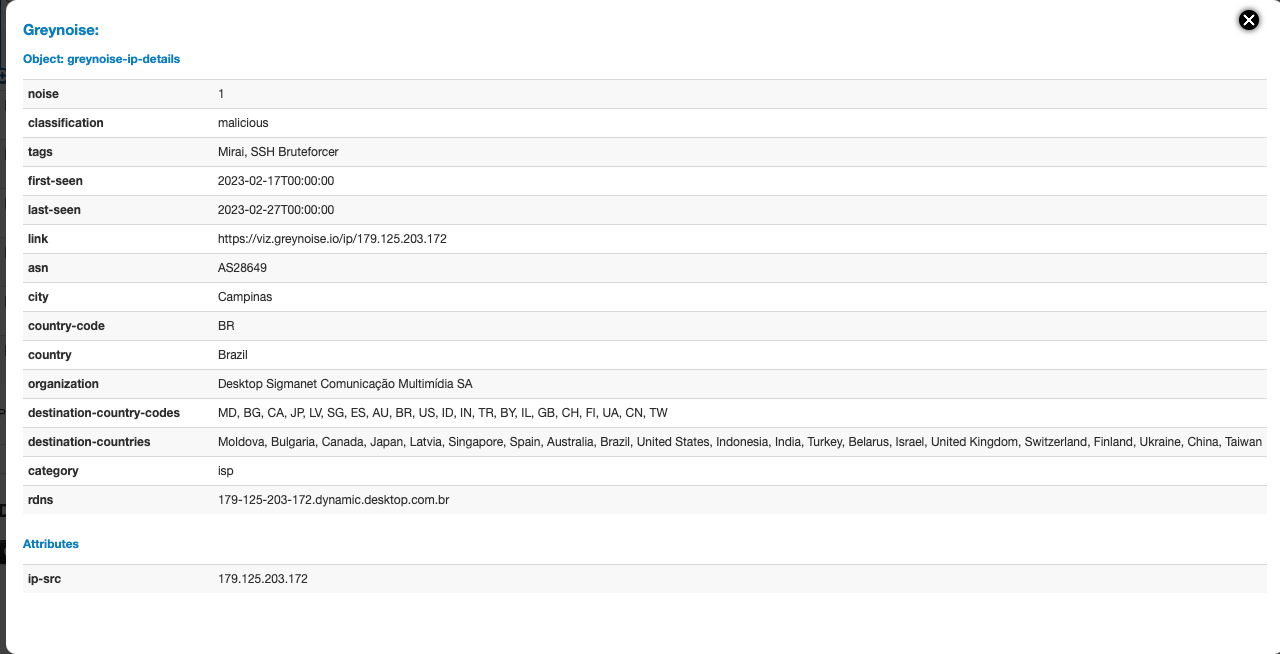

IP Response with Enterprise (Paid) API Enabled

GreyNoise IP Details from Enterprise (Paid) API

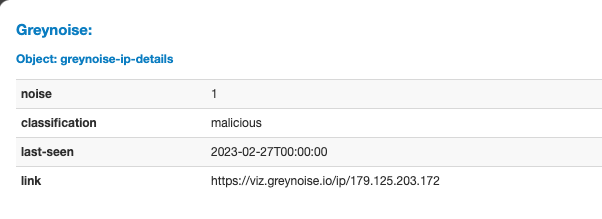

IP Response with Community (Free) API Enabled

GreyNoise IP Details from Community (Free) API

Indicator must be of type "ip-src" or "ip-dst"

When adding an IP indicator as an attribute to an event, the attribute must be of type "ip-src" or "ip-dst" for the module to function.

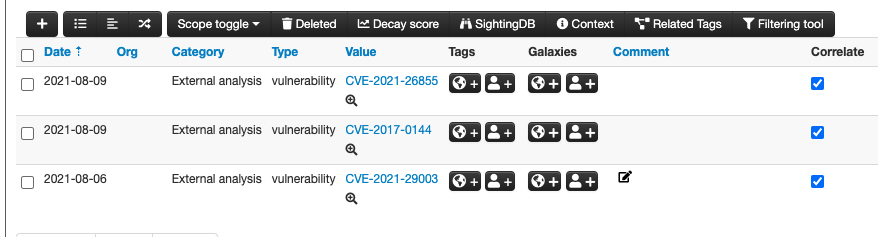

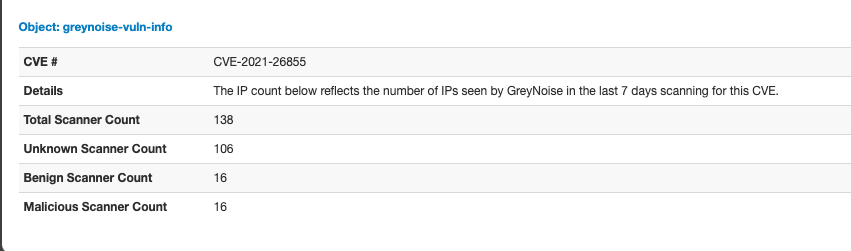

Performing a Hover CVE Query

From the Event Details view, select the magnifying glass icon next to a CVE indicator to pull details from GreyNoise on that CVE. Scanning details for the last 7 days are displayed.

Click the magnifying glass next to the CVE indicator to query the GreyNoise module.

Indicator must be of type "vulnerability"

When adding a CVE indicator as an attribute to an event, the attribute must be of type "vulnerability" for the module to function.

CVE Lookup Requires Enterprise (Paid) API Access

The CVE query function of the module only works when an Enterprise (Paid) API Key and the "enterprise" API Key Type are enabled in the module settings. Users with Community-level access only have access to the IP lookup functionality.
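The attribute-type and API-type rules described above can be expressed as a small check. This is a minimal sketch for illustration only; `greynoise_lookup_kind` is a hypothetical helper and does not reflect how the module is implemented internally.

```python
from typing import Optional

# Attribute types accepted for each kind of hover lookup, per the notes above.
IP_TYPES = {"ip-src", "ip-dst"}
CVE_TYPE = "vulnerability"


def greynoise_lookup_kind(attribute_type: str, api_type: str) -> Optional[str]:
    """Return "ip", "cve", or None for a MISP attribute type (hypothetical helper)."""
    if attribute_type in IP_TYPES:
        # IP lookups are available with both Community and Enterprise keys.
        return "ip"
    if attribute_type == CVE_TYPE and api_type == "enterprise":
        # CVE queries need an Enterprise key and the "enterprise" key type.
        return "cve"
    return None


if __name__ == "__main__":
    for attr_type in ("ip-src", "ip-dst", "vulnerability", "domain"):
        for api_type in ("community", "enterprise"):
            print(attr_type, api_type, greynoise_lookup_kind(attr_type, api_type))
```

A result of `None` means the module would have nothing to do for that attribute, either because the type is unsupported or because a CVE query was attempted with Community access.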

Install Feed Script Integration

GreyNoise does not currently support the official method used by MISP to pull in a list of indicators as a feed. However, the steps below accomplish the same result by:

- Installing a Python script that collects indicators to a file on the MISP host

- Setting up a cron job to run the script daily

- Configuring a Freetext Parsed Feed from a local file

Creating and testing the Python Script

The following script requires the GreyNoise Python module to be installed on the local system, along with a supported version of Python 3.

To install the module, run:

pip3 install greynoise

Create a folder for the GreyNoise Python script and output files:

mkdir /home/misp/greynoise

In the folder, create a file named greynoise-misp-feed.py with the following content:

    import logging
    import os
    from concurrent.futures import ThreadPoolExecutor, as_completed
    from typing import Any, Dict, Optional

    # This script requires version 3.0.0 or higher of the greynoise python library
    from greynoise.api import GreyNoise, APIConfig

    # Configure logging
    logging.basicConfig(
        level=logging.INFO,
        format='%(asctime)s - %(threadName)s - %(levelname)s - %(message)s'
    )
    logger = logging.getLogger(__name__)


    def get_greynoise_session() -> Optional[GreyNoise]:
        """Initialize and return a GreyNoise session with error handling."""
        try:
            GN_API_KEY = os.environ.get("GN_API_KEY")
            if not GN_API_KEY:
                logger.error("GN_API_KEY environment variable not set")
                return None
            api_config = APIConfig(api_key=GN_API_KEY, integration_name="misp-feed-script-v2.0")
            session = GreyNoise(api_config)
            return session
        except Exception as e:
            logger.error(f"Failed to initialize GreyNoise session: {e}")
            return None


    def get_output_filename(query: str) -> str:
        """Determine the output filename based on the query type."""
        if "benign" in query:
            return "gn_feed_benign.txt"
        elif "malicious" in query:
            return "gn_feed_malicious.txt"
        elif "suspicious" in query:
            return "gn_feed_suspicious.txt"
        else:
            return "gn_feed_other.txt"


    def process_query(query: str) -> Dict[str, Any]:
        """Process a single query and return results with error information."""
        result = {
            "query": query,
            "success": False,
            "error": None,
            "ip_count": 0,
            "filename": get_output_filename(query)
        }
        logger.info(f"Starting to process query: {query}")

        # Get GreyNoise session
        session = get_greynoise_session()
        if not session:
            result["error"] = "Failed to initialize GreyNoise session"
            return result

        try:
            # Open output file (relative path: written to the current working directory)
            with open(result["filename"], "w") as file:
                logger.info(f"Querying GreyNoise API for: {query}")

                # Initial query
                response = session.query(query=query, exclude_raw=True, size=10000)

                # Check if response is valid
                if not response or "request_metadata" not in response:
                    result["error"] = "Invalid response from GreyNoise API"
                    return result

                count = response["request_metadata"].get("count", 0)
                data = response.get("data", [])
                if count == 0 or len(data) == 0:
                    logger.warning(f"No data returned for query: {query}")
                    result["success"] = True  # This is not an error, just no data
                    return result

                logger.info(f"Processing {count} IPs for query: {query}")

                # Process first page
                for item in data:
                    if "ip" in item:
                        file.write(str(item["ip"]) + "\n")
                        result["ip_count"] += 1

                # Process subsequent pages
                scroll = response["request_metadata"].get("scroll")
                complete = response["request_metadata"].get("complete", True)
                while scroll and not complete:
                    logger.info(f"Fetching next page for query: {query}")
                    try:
                        response = session.query(query=query, scroll=scroll, exclude_raw=True, size=10000)
                        if not response or "data" not in response:
                            logger.warning(f"Invalid response for scroll query: {query}")
                            break
                        data = response["data"]
                        for item in data:
                            if "ip" in item:
                                file.write(str(item["ip"]) + "\n")
                                result["ip_count"] += 1
                        scroll = response["request_metadata"].get("scroll", "")
                        complete = response["request_metadata"].get("complete", True)
                    except Exception as e:
                        logger.error(f"Error during scroll query for {query}: {e}")
                        break

            result["success"] = True
            logger.info(f"Successfully processed {result['ip_count']} IPs for query: {query}")
        except Exception as e:
            error_msg = f"Error processing query '{query}': {e}"
            logger.error(error_msg)
            result["error"] = error_msg
        return result


    def main():
        """Main function to process all queries concurrently."""
        queries = [
            "classification:benign last_seen:1d",
            "classification:malicious last_seen:1d",
            "classification:suspicious last_seen:1d"
        ]
        logger.info(f"Starting processing of {len(queries)} queries with threading")

        # Process queries concurrently using ThreadPoolExecutor
        with ThreadPoolExecutor(max_workers=len(queries), thread_name_prefix="QueryWorker") as executor:
            # Submit all queries
            future_to_query = {executor.submit(process_query, query): query for query in queries}

            # Collect results as they complete
            results = []
            for future in as_completed(future_to_query):
                query = future_to_query[future]
                try:
                    result = future.result()
                    results.append(result)
                    if result["success"]:
                        logger.info(f"Query '{query}' completed successfully with {result['ip_count']} IPs")
                    else:
                        logger.error(f"Query '{query}' failed: {result['error']}")
                except Exception as e:
                    logger.error(f"Unexpected error processing query '{query}': {e}")
                    results.append({
                        "query": query,
                        "success": False,
                        "error": f"Unexpected error: {e}",
                        "ip_count": 0,
                        "filename": get_output_filename(query)
                    })

        # Summary
        successful_queries = [r for r in results if r["success"]]
        failed_queries = [r for r in results if not r["success"]]
        total_ips = sum(r["ip_count"] for r in results)

        logger.info("=" * 50)
        logger.info("PROCESSING SUMMARY")
        logger.info("=" * 50)
        logger.info(f"Total queries processed: {len(results)}")
        logger.info(f"Successful queries: {len(successful_queries)}")
        logger.info(f"Failed queries: {len(failed_queries)}")
        logger.info(f"Total IPs processed: {total_ips}")

        if failed_queries:
            logger.error("Failed queries:")
            for result in failed_queries:
                logger.error(f" - {result['query']}: {result['error']}")

        logger.info("Processing complete!")


    if __name__ == "__main__":
        main()

If version 2.3.0 or lower of the greynoise Python library is installed, use the following content instead:

    import os

    # This script requires version 2.3.0 or lower of the greynoise python library
    from greynoise import GreyNoise

    GN_API_KEY = os.environ.get("GN_API_KEY")
    session = GreyNoise(api_key=GN_API_KEY, integration_name="misp-feed-script-v1")

    queries = ["classification:benign last_seen:1d", "classification:malicious last_seen:1d"]

    for query in queries:
        print(f"Building indicator list for query: {query}")
        if "benign" in query:
            file_name = "gn_feed_benign.txt"
        elif "malicious" in query:
            file_name = "gn_feed_malicious.txt"
        else:
            file_name = "gn_feed_other.txt"
        print(f"Outputting to file: {file_name}")

        # The with block closes the output file even if a query fails
        with open(file_name, "w") as feed_file:
            try:
                print("Querying GreyNoise API")
                response = session.query(query=query, exclude_raw=True)
            except Exception as e:
                print(f"GreyNoise API connection failure, error {e}")
                continue  # no response to process, move on to the next query

            if response["count"] == 0 or len(response["data"]) == 0:
                print("GreyNoise API query returned no data")
                continue

            print("Processing first page of query results")
            for item in response["data"]:
                feed_file.write(str(item["ip"]) + "\n")

            # Keep requesting pages until no scroll token is returned
            scroll = response.get("scroll")
            while scroll:
                print("Querying for next page of results")
                response = session.query(query=query, scroll=scroll, exclude_raw=True)
                print("Processing next page of results")
                for item in response["data"]:
                    feed_file.write(str(item["ip"]) + "\n")
                scroll = response.get("scroll")
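The two scripts above target different versions of the greynoise library. The following is a minimal sketch for checking which one applies on a given host. It assumes that any release with a major version below 3 should use the legacy script, which is an interpretation of the version comments in the scripts, and the function names are hypothetical.

```python
from importlib import metadata
from typing import Optional


def script_variant(version: Optional[str]) -> str:
    """Map an installed greynoise version string to the script it should use."""
    if version is None:
        return "not installed"
    # Assumption: only the major version matters when choosing a script.
    major = int(version.split(".")[0])
    return "v3 script" if major >= 3 else "legacy script"


def installed_greynoise_version() -> Optional[str]:
    """Return the installed greynoise version, or None if it is missing."""
    try:
        return metadata.version("greynoise")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print(script_variant(installed_greynoise_version()))
```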

Update Feed Selection

By default, the scripts above collect the benign and malicious feeds (the script for library version 3.0.0 or higher also collects the suspicious feed). Ensure that an appropriate subscription is in place for your account, or update the query list to include only the feed queries you are entitled to, as noted in Using GreyNoise as a Feed.
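One way to limit the script to specific feeds is to generate the query list from the feed names you want. The following is a minimal sketch; `build_queries` is a hypothetical helper, and the query strings follow the pattern already used in the scripts above.

```python
from typing import Iterable, List

# Feed names that appear in the query lists of the scripts above.
KNOWN_FEEDS = ("benign", "malicious", "suspicious")


def build_queries(feeds: Iterable[str], window: str = "1d") -> List[str]:
    """Build GreyNoise query strings for the selected feeds (hypothetical helper)."""
    queries = []
    for feed in feeds:
        name = feed.strip().lower()
        if name not in KNOWN_FEEDS:
            raise ValueError(f"Unknown feed: {feed!r}")
        queries.append(f"classification:{name} last_seen:{window}")
    return queries


if __name__ == "__main__":
    # Example: an account that should only pull the malicious feed.
    print(build_queries(["malicious"]))
```

The returned list can replace the hard-coded `queries` list in either script.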

The script relies on your GreyNoise API key being set as an environment variable named GN_API_KEY, so be sure to set it using the following:

export GN_API_KEY="your-key-here"

Test the script by running the following command:

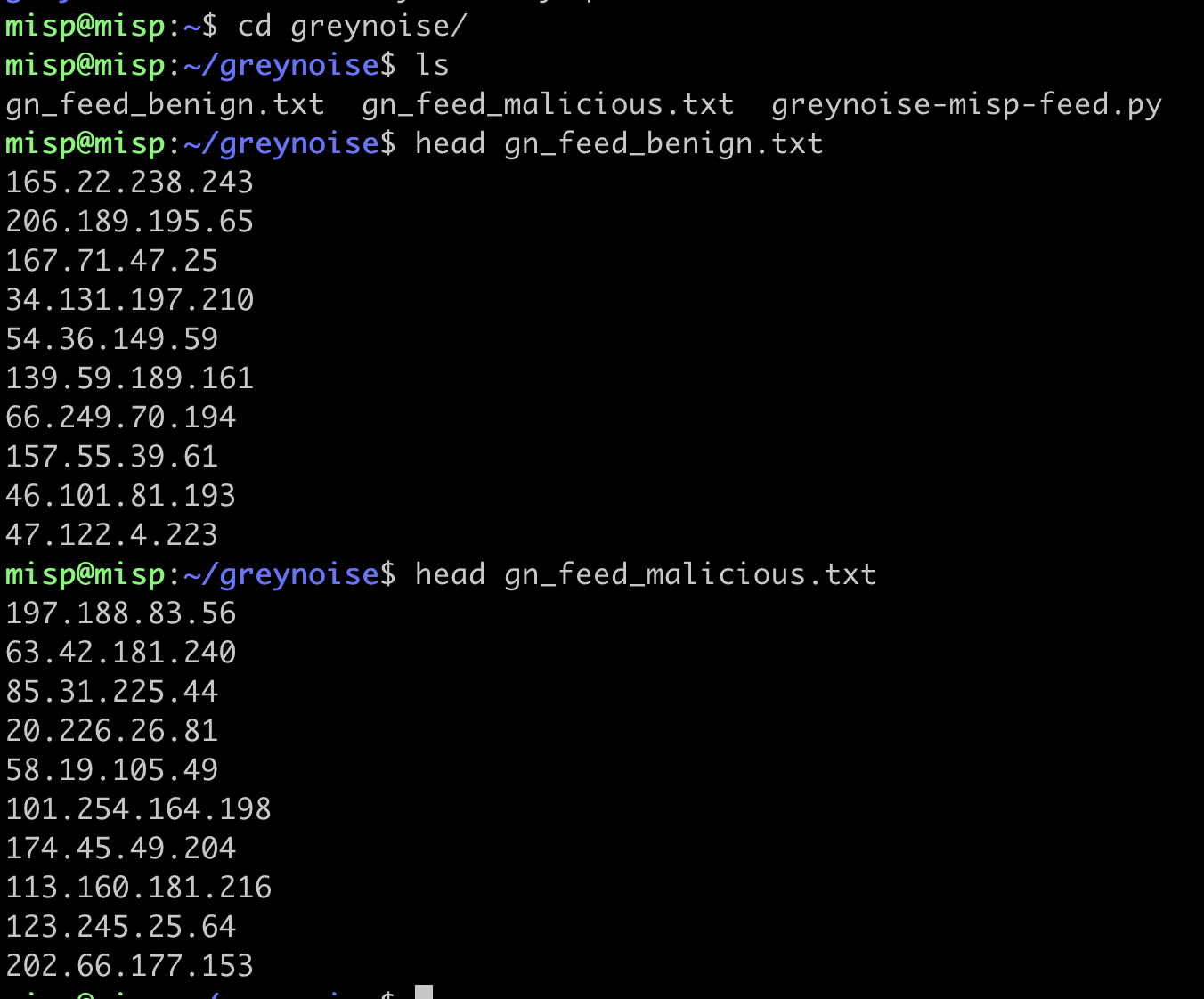

python3 greynoise-misp-feed.py

If the script is working correctly, it creates output files containing the list of IPs, with a separate file for each feed list (for example, gn_feed_benign.txt and gn_feed_malicious.txt).
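After a test run, it can be useful to confirm that the output files contain one IP address per line before pointing MISP at them. The following is a minimal sketch using only the Python standard library; `check_feed_file` is a hypothetical helper, and the file names are the ones written by the scripts above.

```python
import ipaddress
from pathlib import Path
from typing import List, Tuple


def check_feed_file(path: str) -> Tuple[int, List[str]]:
    """Return (number of valid IPs, list of invalid lines) for a feed file."""
    valid = 0
    invalid: List[str] = []
    for line in Path(path).read_text().splitlines():
        entry = line.strip()
        if not entry:
            continue  # ignore blank lines
        try:
            ipaddress.ip_address(entry)  # accepts IPv4 and IPv6 addresses
            valid += 1
        except ValueError:
            invalid.append(entry)
    return valid, invalid


if __name__ == "__main__":
    for name in ("gn_feed_benign.txt", "gn_feed_malicious.txt"):
        if Path(name).exists():
            count, bad = check_feed_file(name)
            print(f"{name}: {count} valid IPs, {len(bad)} invalid lines")
```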

Creating the daily execution schedule

To have the file(s) updated daily, create a cron job to run the script on a schedule:

crontab -e

Add to the file:

0 23 * * * /usr/bin/python3 /home/misp/greynoise/greynoise-misp-feed.py

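This runs the script at 11 PM every day in the host's time zone (11 PM UTC if the host clock is set to UTC). Cron does not load your interactive shell environment, so make sure GN_API_KEY is also available to the job, for example by defining it at the top of the crontab. Because the script writes its output files to the current working directory, have the job run from /home/misp/greynoise if the files should be created there.

Creating the feed import in the MISP UI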

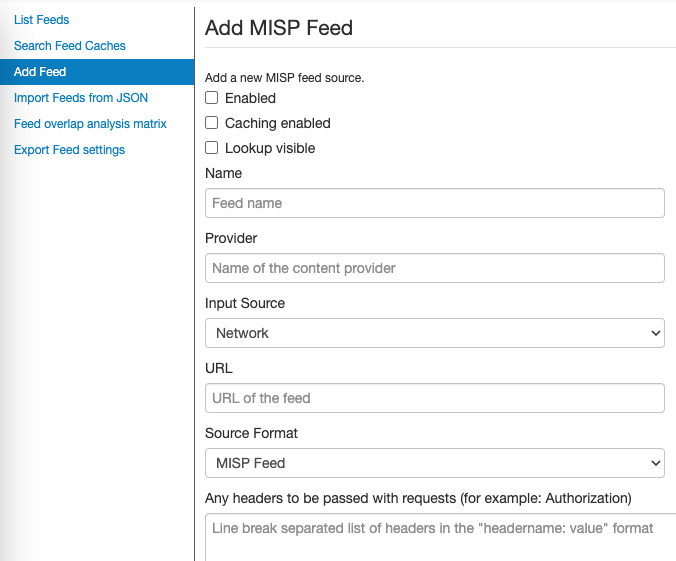

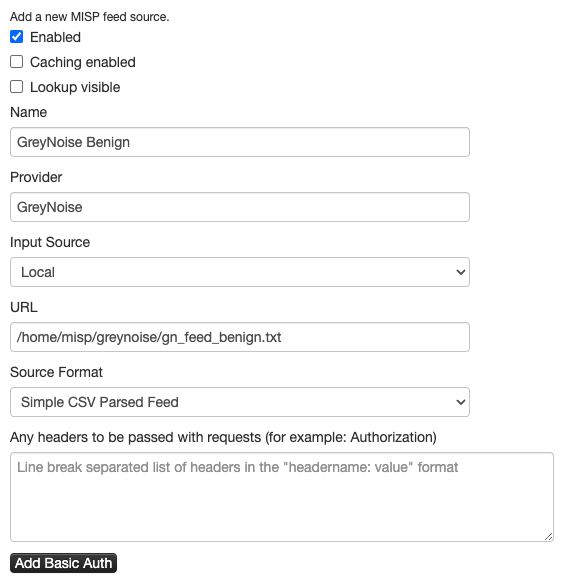

Within the MISP UI, go to the Sync Actions menu and select List Feeds.

Use the Add Feed option from the right navigation bar:

Configure the Feed with the following settings (replace benign with malicious when/where necessary):

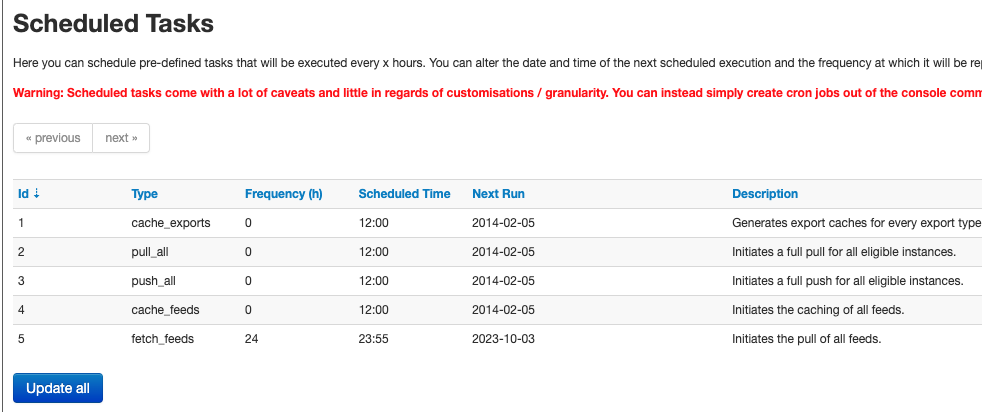

Scheduling the daily import into MISP

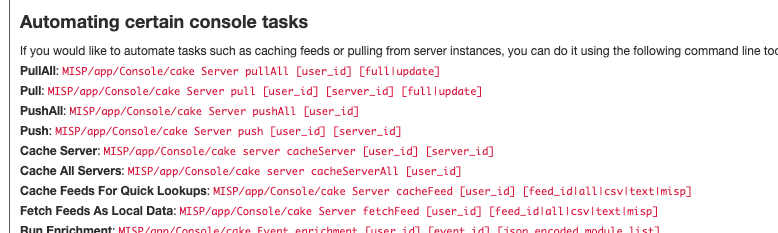

MISP provides two methods to auto-import feeds:

- Use the Scheduled Tasks option. However, this will import all feeds that are enabled on the system.

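- Use the console automation commands to run the fetchFeed command from a scheduler such as cron. In the example below, the two trailing numbers are the MISP user ID and the feed ID; replace them with the values for your system: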

/var/www/MISP/app/Console/cake Server fetchFeed 1 74
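To schedule the import for a single feed, the console command above can be placed in a crontab entry. The following is a minimal sketch that builds such an entry. It assumes the two numeric arguments are the MISP user ID and the feed ID, and the helper names and the example schedule (30 minutes after the script's 11 PM run) are hypothetical.

```python
import shlex
from typing import List

# Path to the MISP console tool, as used in the command above.
CAKE = "/var/www/MISP/app/Console/cake"


def fetch_feed_command(user_id: int, feed_id: int) -> List[str]:
    """Return the fetchFeed command as an argument list (hypothetical helper)."""
    if user_id < 1 or feed_id < 1:
        raise ValueError("user_id and feed_id must be positive integers")
    return [CAKE, "Server", "fetchFeed", str(user_id), str(feed_id)]


def crontab_line(minute: int, hour: int, user_id: int, feed_id: int) -> str:
    """Return a daily crontab entry that runs the fetchFeed command."""
    command = " ".join(shlex.quote(part) for part in fetch_feed_command(user_id, feed_id))
    return f"{minute} {hour} * * * {command}"


if __name__ == "__main__":
    # Prints: 30 23 * * * /var/www/MISP/app/Console/cake Server fetchFeed 1 74
    print(crontab_line(30, 23, 1, 74))
```

Scheduling the import after the script has finished gives the feed file time to be rewritten before MISP reads it.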
